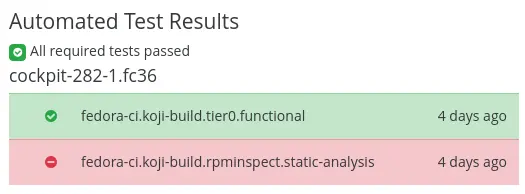

For the last quarter I have worked in Red Hat’s Testing Farm (TFT) and “Operating System CI” (OSCI) teams, on a temporary rotation. TFT develops and runs the Testing Farm (TF) infrastructure, an API which you tell “go run a test with $these parameters”; it then allocates a bunch of cloud instances, sets them up, runs your test, and returns the result. OSCI builds upon this to implement Fedora’s and RHEL’s gating tests for package updates, image builds, upgrades, and so on. Every time you look at Fedora’s bodhi and see these, you are looking at results provided by these teams:

As a Cockpit and Fedora developer, I usually sit on the receiving end of these tests. My colleagues and I often found them hard to understand, investigate, and reproduce. So I took another chance to do a work rotation to improve them myself, get to know more colleagues, understand their problems and perspectives, help with their problems of the day, and learn something new.

New TF results viewer

My first exercise was to replace TF’s results viewer. It was based on an outdated fork of xunit-viewer, which came with several drawbacks: It was hard to extend (you could not even add clickable links to artifacts!), unwieldy to use/deploy (you need npm install and a build chain), and rather heavy (over 2 MB that your poor browser had to download and interpret for every test result).

So I came up with oculus. It mainly consists of a ~ 10 kB static results.html file which is trivial to deploy – just copy that file. It shows you all the important logs and artifacts, so that as a developer you have a better chance to understand what went wrong.

It’s quite simple and easily hackable; Miroslav already added some improvements to it. All the other files are for the cypress.io browser integration tests and ESLint static code checking.

Reproducing tests

My biggest gripe with gating tests has always been that they were really difficult to reproduce and investigate locally. TF does magic things behind the scenes: most importantly, the installation of the test artifact like the COPR build or bodhi package update that is being tested. But also some VM preparation like updating packages and applying various hacks.

With the old results viewer, none of that was even visible. Oculus at least showed you the guest setup logs, but they still remained hard to discover or apply to a local tmt run in a VM. The tests did produce something called a tmt-reproducer.sh, but it was very incomplete:

    # tmt reproducer
    git clone https://github.com/martinpitt/python-dbusmock testcode
    git -C testcode checkout -b testbranch 7b122ecc2fddd45af3bd241dc56fab6e4a7aa0db
    cd testcode
    curl -LO https://artifacts.dev.testing-farm.io/061e60db-d707-462c-936f-77c05616fca8/workdir-repository-None-HAQl4n/tmt-environment-plans-all.yaml
    tmt -c trigger=commit -c arch=x86_64 -c distro=fedora-37 run --all --verbose -e @tmt-environment-plans-all.yaml provision --how virtual --image Fedora-37 plan --name ^\/plans\/all$

This was from a GitHub PR packit run, i.e. testing against a COPR build. Nothing in that reproducer would actually install the COPR and the package to be tested, so if you’d actually run that, the test would just fail early and yell “HAHAHAHAno!” at you.

The central project for that activity is gluetools-modules, which implements all these steps like “run guest setup playbooks”, “install COPR repo”, “invoke tmt”, and “build a results.xml”. Unfortunately that project has a lot of historical baggage, so it is a lot bigger and more complex than it ought to be, and I found it rather hard to understand and get a feeling for. But after a few weeks I made some progress and eventually landed a bunch of improvements:

- The documentation now explains how to run a pipeline locally, which is a prerequisite to do any non-trivial development on this project.

- The code now fully works with Python 3.

- TMT tests can now declare additional logs and artifacts (see below).

- The COPR/koji installation steps were a lot more complicated than necessary, and got simplified.

- tmt-reproducer.sh now includes the installation of COPR/koji artifacts. For the PR mentioned above, it looks like this:

      # tmt reproducer
      git clone https://github.com/martinpitt/python-dbusmock testcode
      git -C testcode checkout -b testbranch ca2a8d07d026f994406a2e7871f9f6e392f9c4b6
      cd testcode
      curl -LO https://artifacts.dev.testing-farm.io/d1fe37a1-62b2-4f96-a3cf-a57c0e690586/workdir-repository-None-bc1b62r_/tmt-environment-plans-all-second.yaml
      tmt --context arch=x86_64 --context distro=fedora-37 --context trigger=commit run --all --verbose -e @tmt-environment-plans-all-second.yaml provision --how virtual --image Fedora-37 prepare --how shell --script '
      curl https://copr.fedorainfracloud.org/coprs/packit/martinpitt-python-dbusmock-160/repo/fedora-37/packit-martinpitt-python-dbusmock-160-fedora-37.repo --retry 5 --output /etc/yum.repos.d/copr_build-martinpitt-python-dbusmock-160-1.repo
      dnf --allowerasing -y reinstall https://download.copr.fedorainfracloud.org/results/packit/martinpitt-python-dbusmock-160/fedora-37-x86_64/05088426-python-dbusmock/python3-dbusmock-0.28.6-1.20221206092207521496.pr160.11.gca2a8d0.fc37.noarch.rpm || true
      dnf --allowerasing -y install https://download.copr.fedorainfracloud.org/results/packit/martinpitt-python-dbusmock-160/fedora-37-x86_64/05088426-python-dbusmock/python3-dbusmock-0.28.6-1.20221206092207521496.pr160.11.gca2a8d0.fc37.noarch.rpm
      rpm -q python3-dbusmock-0.28.6-1.20221206092207521496.pr160.11.gca2a8d0.fc37.noarch
      ' plan --name ^/plans/all/second$
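The artifact installation part of that reproducer follows a small, repeatable pattern: drop the COPR repo file, try a reinstall, install the RPM, and verify it with rpm -q. The following is only a rough sketch of that pattern, not the actual gluetools-modules code. The function name copr_prepare_script, its arguments, and the example.invalid URLs are made up for illustration; only the four generated commands mirror the reproducer above.

```shell
#!/bin/sh
# Sketch only: copr_prepare_script is a hypothetical helper, not part of
# tmt, Testing Farm, or gluetools-modules.
# It prints the guest preparation commands for a single COPR build RPM.
# Arguments: <repo file URL> <repo file name> <RPM URL>
copr_prepare_script() {
    repo_url=$1
    repo_file=$2
    rpm_url=$3
    # "rpm -q" is given the RPM file name without the ".rpm" suffix,
    # as in the reproducer above
    nvra=$(basename "$rpm_url" .rpm)

    # Same four steps as in the reproducer: repo file, reinstall (failure
    # ignored), install, verify
    cat <<EOF
curl $repo_url --retry 5 --output /etc/yum.repos.d/$repo_file
dnf --allowerasing -y reinstall $rpm_url || true
dnf --allowerasing -y install $rpm_url
rpm -q $nvra
EOF
}

# Usage with placeholder URLs (example.invalid never resolves); this only
# prints the commands and does not run them
copr_prepare_script \
    https://example.invalid/my-copr.repo \
    copr_build-my-copr.repo \
    https://example.invalid/results/mypkg-1.0-1.fc37.noarch.rpm
```

The printed text is what ends up as the argument of prepare --how shell --script in the reproducer, so a script like this only needs the repo URL and the RPM URL of the build under test.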

I fully acknowledge that this is very messy – my plan has always been to first make it work, and then make it pretty. At least after some research I now know exactly how to improve this – this has just been blocked for a while as the TF team is currently doing some major cleanup work and paying down technical debt on this project. So I will finish this up in January.

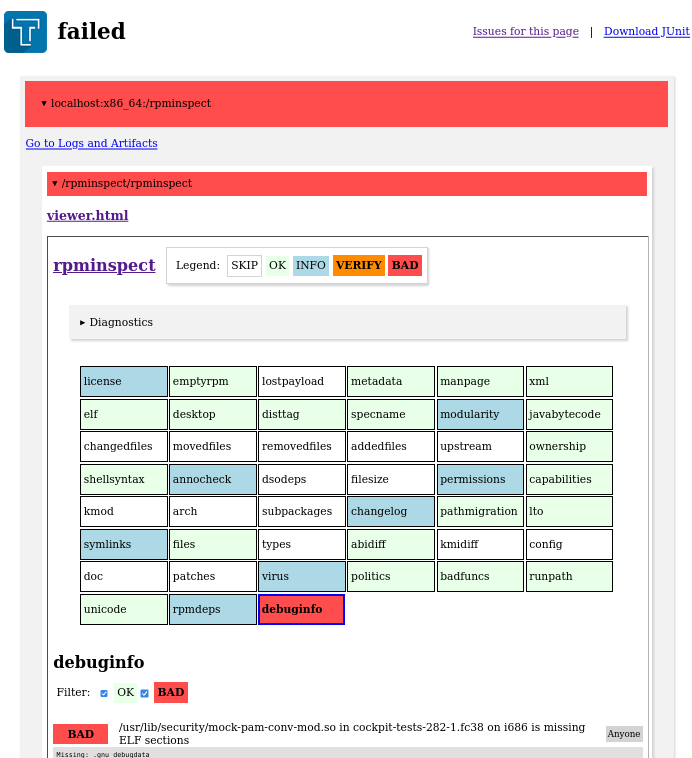

rpminspect reports

rpminspect analyzes new package uploads for generic issues like bad/missing licenses, ELF/library linker problems, broken shell syntax, bad dependencies, and so on. It runs for every Fedora and RHEL update.

The OSCI team was not happy about the rather complicated way it was run, and the suboptimal presentation of the results.

So I first repeated my oculus exercise and created a customized viewer for rpminspect results. I followed that with a rather big yak shaving exercise to clean up the rpminspect runner pipeline and use the custom viewer, in a way that is generic and useful for any other test.

Now they look like this. As the TF results will be cleaned up in a few months, here is a scaled down screenshot:

Custom test logs/artifacts

Your own TMT tests can have extra artifacts as well now, like custom viewers, coverage reports, screenshots, or journal logs. tmt 1.18 introduced support for creating a custom results.yaml; TF will plumb that through, and oculus will show the artifacts. Your plan’s discover: has to declare result: custom like this:

    discover:
        how: shell
        tests:
          - name: mytest
            framework: shell
            test: run-tests.sh
            # add this to enable a custom results.yaml with extra artifacts
            result: custom

and your test needs to create $TMT_TEST_DATA/results.yaml like this:

    - name: /mytest
      result: pass|fail|error
      log:
        # the first entry must always be the test's output; shown inline in the viewer
        - data/mytest/output.txt
        - data/mytest/data/viewer.html
        - data/mytest/data/screenshot.png
        - data/mytest/data/journal.log

Thanks

I want to thank the OSCI and TF teams for their warm welcome, and especially Miroslav Vadkerti and Michal Srb for taking the time to explain stuff to me, discuss ideas, and review code changes. I had some fun, learned new things, and hope to have improved things a little.

Have some nice end-of-year holidays everyone!